July 4, 2023

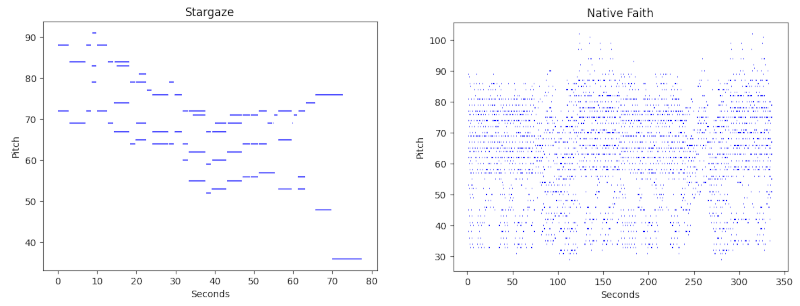

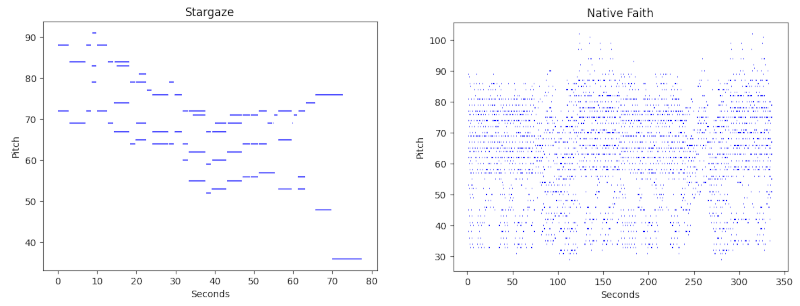

For the past several months I’ve been trying to make music with a neural network. After trying many different concepts, I settled on something very similar to Music Transformer. Here are some somewhat curated samples:

They’re not great, but that’s fine. It’s a lot better than nothing, which is what I was getting when I first started.

If you’ve read the Music Transformer paper, you’ll understand most of this. Here’s what I do differently:

I made all notes into whole, half, quarter, eighth, or sixteenth notes, or dotted versions of these. There are also a couple of special durations that signify “a really short note” and “a really long note.” MIDI stores a note’s length as a raw number of ticks between its note-on and note-off events, not as a symbolic value like “quarter note,” so I had to translate all of my training data into these durations. This works 99% of the time, but it can mess up songs with tempo changes.
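A rough sketch of this kind of duration snapping might look like the following. It’s not the code from this project: the token names and the cutoffs for the two special durations (`VERY_SHORT_BEATS`, `VERY_LONG_BEATS`) are made up for illustration, and durations are measured in quarter-note beats using the MIDI file’s ticks-per-beat resolution.

```python
# Hypothetical sketch: snap a raw MIDI duration (in ticks) to the nearest
# symbolic note value. Durations are measured in quarter-note beats.
BASE_VALUES = {
    "whole": 4.0,
    "half": 2.0,
    "quarter": 1.0,
    "eighth": 0.5,
    "sixteenth": 0.25,
}

# Dotted notes last 1.5x as long as their undotted counterpart.
DURATIONS = dict(BASE_VALUES)
DURATIONS.update({f"dotted_{name}": beats * 1.5 for name, beats in BASE_VALUES.items()})

# Assumed cutoffs for the two special tokens (illustrative values only).
VERY_SHORT_BEATS = 0.125
VERY_LONG_BEATS = 8.0


def quantize_duration(ticks: int, ticks_per_beat: int) -> str:
    """Map a duration in MIDI ticks to a duration token name."""
    beats = ticks / ticks_per_beat
    if beats < VERY_SHORT_BEATS:
        return "very_short"
    if beats > VERY_LONG_BEATS:
        return "very_long"
    # Otherwise pick the symbolic value closest to the raw duration.
    return min(DURATIONS, key=lambda name: abs(DURATIONS[name] - beats))
```

For example, with 480 ticks per beat, a 470-tick note snaps to `"quarter"` and a 720-tick note snaps to `"dotted_quarter"`.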

The network decides which pitch to play next, and then decides how long to play it. The second step is handled by a separate, smaller transformer stack. This makes inference clunky, but I like that the duration can be conditioned on the pitch that was just chosen.
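To make the two-step decoding concrete, here’s a minimal sketch of the sampling loop. `pitch_model` and `duration_model` are hypothetical stand-ins for the two transformer stacks: each returns unnormalized logits over its own vocabulary, and the duration stack also receives the pitch that was just sampled.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

# Hypothetical model interfaces: each takes the (pitch, duration) history and
# returns unnormalized logits over its own vocabulary.
PitchModel = Callable[[Sequence[Tuple[int, int]]], List[float]]
DurationModel = Callable[[Sequence[Tuple[int, int]], int], List[float]]


def sample_from_logits(logits: List[float], rng: random.Random) -> int:
    """Draw an index from softmax(logits), using the max trick for stability."""
    peak = max(logits)
    weights = [math.exp(x - peak) for x in logits]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def generate(pitch_model: PitchModel, duration_model: DurationModel,
             steps: int, seed: int = 0) -> List[Tuple[int, int]]:
    rng = random.Random(seed)
    history: List[Tuple[int, int]] = []  # (pitch, duration) pairs so far
    for _ in range(steps):
        # Step 1: the main stack chooses the next pitch.
        pitch = sample_from_logits(pitch_model(history), rng)
        # Step 2: the smaller stack chooses a duration, seeing the new pitch.
        duration = sample_from_logits(duration_model(history, pitch), rng)
        history.append((pitch, duration))
    return history
```

Each note costs one call to each stack, which is part of what makes this style of inference clunkier than a single model that emits one token at a time.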

In addition to the 88 keys of a keyboard, there’s also a special “pitch” that represents the passage of time. During inference, I prevent the model from emitting two of these consecutively.
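One simple way to enforce the no-consecutive-time-tokens rule is to mask the logits before sampling. This sketch assumes a vocabulary where indices 0 to 87 are the piano keys and a hypothetical index 88 (`TIME_TOKEN`) is the time-passage token.

```python
import math
from typing import List, Optional

NUM_KEYS = 88
TIME_TOKEN = NUM_KEYS  # hypothetical index for the "passage of time" pitch
VOCAB_SIZE = NUM_KEYS + 1


def mask_repeated_time(logits: List[float], previous: Optional[int]) -> List[float]:
    """Return a copy of the logits that forbids emitting the time token twice in a row."""
    if len(logits) != VOCAB_SIZE:
        raise ValueError(f"expected {VOCAB_SIZE} logits, got {len(logits)}")
    masked = list(logits)
    if previous == TIME_TOKEN:
        # exp(-inf) == 0, so this token gets zero probability after the softmax.
        masked[TIME_TOKEN] = -math.inf
    return masked
```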

I have my own curated dataset. It’s not just classical music, although that is necessarily a large chunk of it. I only have about 500 songs so far.

During training, I randomly change 5% of the input notes by 1 semitone. The idea is to make the model robust to small mistakes. (I also use random chromatic transposition, just like everyone else.)
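The augmentation could be sketched like this. It assumes pitches are key indices 0 to 87 with a hypothetical `TIME_TOKEN` at 88, that the one-semitone noise is applied only to the model’s inputs while the targets keep the clean (transposed) notes, and that transposition is limited to ±5 semitones. None of those details come from the post.

```python
import random
from typing import List, Tuple

NUM_KEYS = 88
TIME_TOKEN = NUM_KEYS  # hypothetical index for the time-passage token


def augment(pitches: List[int], rng: random.Random,
            noise_rate: float = 0.05, max_transpose: int = 5) -> Tuple[List[int], List[int]]:
    """Return (noisy_inputs, clean_targets) for one training sequence."""
    notes = [p for p in pitches if p != TIME_TOKEN]
    # Random chromatic transposition, limited so no note leaves the keyboard.
    lo = max(-max_transpose, -min(notes, default=0))
    hi = min(max_transpose, NUM_KEYS - 1 - max(notes, default=0))
    shift = rng.randint(lo, hi)
    clean = [p if p == TIME_TOKEN else p + shift for p in pitches]

    noisy = []
    for p in clean:
        if p != TIME_TOKEN and rng.random() < noise_rate:
            # Nudge this note one semitone up or down, staying on the keyboard.
            p = min(max(p + rng.choice((-1, 1)), 0), NUM_KEYS - 1)
        noisy.append(p)
    return noisy, clean
```

Constraining the shift up front (rather than clamping transposed notes) keeps the intervals within the piece intact.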

I do a LayerNorm just after each key map, but otherwise don’t have any LayerNorms. I also use ReZero on every residual branch. I’ve done lots of experiments with the transformer architecture but most changes don’t seem to help or hurt at all.
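ReZero replaces the usual normalized residual connection with `x + alpha * F(x)`, where `alpha` is a learnable scalar initialized to zero, so every block starts out as the identity function. Here’s a framework-free sketch of a single residual branch. In a real model `alpha` would be a trainable parameter updated by backpropagation, which this plain-Python version doesn’t do.

```python
from typing import Callable, List

Vector = List[float]


class ReZeroResidual:
    """Residual branch computing x + alpha * sublayer(x), with alpha starting at 0."""

    def __init__(self, sublayer: Callable[[Vector], Vector]):
        self.sublayer = sublayer
        # Learnable scalar in a real framework; zero init makes the block an identity map.
        self.alpha = 0.0

    def __call__(self, x: Vector) -> Vector:
        fx = self.sublayer(x)
        return [xi + self.alpha * fi for xi, fi in zip(x, fx)]
```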

All of the training data is in MIDI format. This is nice because MIDI is really simple and easy to work with. But on the other hand, MIDIs are really rare, so it’s hard to build up a big high-quality dataset. I’ve experimented with automatic MP3-to-MIDI conversion, but it’s not good. Basically I’ve just had to seek out MIDIs wherever they can be found. Shout out to Makinporing for saving the old MSPAF MIDI/sheet music stuff.

I don’t discriminate on the length or style of a song, or the number of instruments, so there’s a decent variety in my dataset. Of course, everything gets converted to a single-track piano, but almost every song still sounds decent in piano-only form.
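Collapsing a multi-track MIDI into one piano track could be as simple as pooling every track’s notes and pulling out-of-range pitches onto the keyboard. The post doesn’t say how out-of-range notes are handled, so folding them by octaves is an assumption here, as is the hypothetical `Note` tuple (times in ticks, pitches as MIDI note numbers). A piano spans MIDI notes 21 (A0) to 108 (C8), which is exactly the 88 keys mentioned above.

```python
from typing import Iterable, List, NamedTuple

LOWEST_PIANO = 21    # MIDI note number of A0
HIGHEST_PIANO = 108  # MIDI note number of C8


class Note(NamedTuple):
    start: int     # onset in ticks
    duration: int  # length in ticks
    pitch: int     # MIDI note number, 0-127


def fold_to_piano(pitch: int) -> int:
    """Move an out-of-range pitch by whole octaves until it fits on a piano."""
    while pitch < LOWEST_PIANO:
        pitch += 12
    while pitch > HIGHEST_PIANO:
        pitch -= 12
    return pitch


def key_index(pitch: int) -> int:
    """Map a piano-range MIDI note number to a key index from 0 to 87."""
    return pitch - LOWEST_PIANO


def merge_to_piano(tracks: Iterable[List[Note]]) -> List[Note]:
    """Pool all tracks into one list of piano-range notes."""
    merged = [
        note._replace(pitch=fold_to_piano(note.pitch))
        for track in tracks
        for note in track
    ]
    # Sort by onset, then pitch, so simultaneous notes come out in a stable order.
    merged.sort(key=lambda n: (n.start, n.pitch))
    return merged
```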

I’d like to add another transformer stack to handle volume, but I haven’t decided how to discretize that yet. I also want to come up with ways to control the model’s output. I experimented a bit with temperature, but it just didn’t seem useful to me. Lower temperatures made the outputs monotonous, and higher temperatures resulted in gibberish. Eventually I’m thinking of running a server in the Colab notebook so I can control it with a GUI that I run locally.
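For reference, temperature sampling divides the logits by a temperature `T` before the softmax. Values below 1 sharpen the distribution toward the single most likely token, which is consistent with the monotonous output described above, and values above 1 flatten it toward uniform, which is consistent with the gibberish. A minimal sketch:

```python
import math
import random
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Divide logits by the temperature, then normalize with a stable softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample(logits: List[float], temperature: float, rng: random.Random) -> int:
    """Draw one token index from the temperature-adjusted distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]
```

With logits `[2.0, 1.0, 0.0]`, a temperature of 0.1 puts over 99% of the probability on the first token, while a temperature of 10 leaves all three between roughly 30% and 37%.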